Image acquisition

Hint

The operating environment and software and hardware configurations are as follows:

- OriginBot robot(Visual Version/Navigation Version)

- PC:Ubuntu (≥20.04) + ROS2 (≥Foxy)

Function introduction

Image acquisition is an indispensable basic function in vision development, users can interact with each frame of data by reading each frame of the image, and can also use various traditional vision algorithms to process each frame of the image on the basis of reading the data. Therefore, the function package is used to solve two problems, one is to help users obtain specific frame image data, and the other is to help developers learn the use of various image data in RDK X3.

Function runs

Compile the feature pack

take_pictures_node Function Pack is a stand-alone feature pack, and users can use the following commands to ensure the proper operation of the function before running.

$ cd /userdate/dev_ws

$ colcon build --packages-select take_pictures_node

$ cp -r /opt/tros/lib/mono2d_body_detection/config/ .

$ cp -r /opt/tros/lib/hand_lmk_detection/config/ .

$ cp -r /opt/tros/lib/hand_gesture_detection/config/ .

Get image frame data

There are two ways to start this function, one is to start the node separately for ros2 run, which can manually specify how many images to get, and the second is to start the launch file and continuously obtain image frames;

Method 1:ros2 run

Open two terminals and execute in the first terminal

Start the camera node in the second terminalMethod 2:ros2 launch

Start through the launch file, because the number of images to be obtained is set by default in the launch file, the machine will automatically obtain the picture after execution, and you can see the picture corresponding to the camera by opening the IP:8000 web page.

Description of the routine

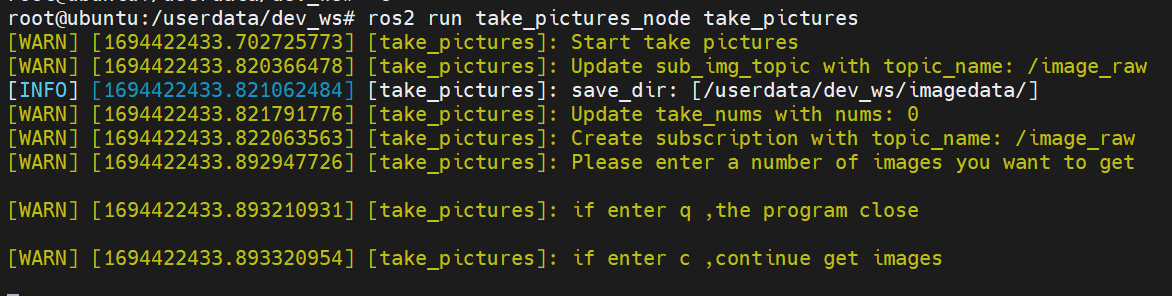

Taking ros2 run as an example, after starting two terminals, the result is shown in the following figure.

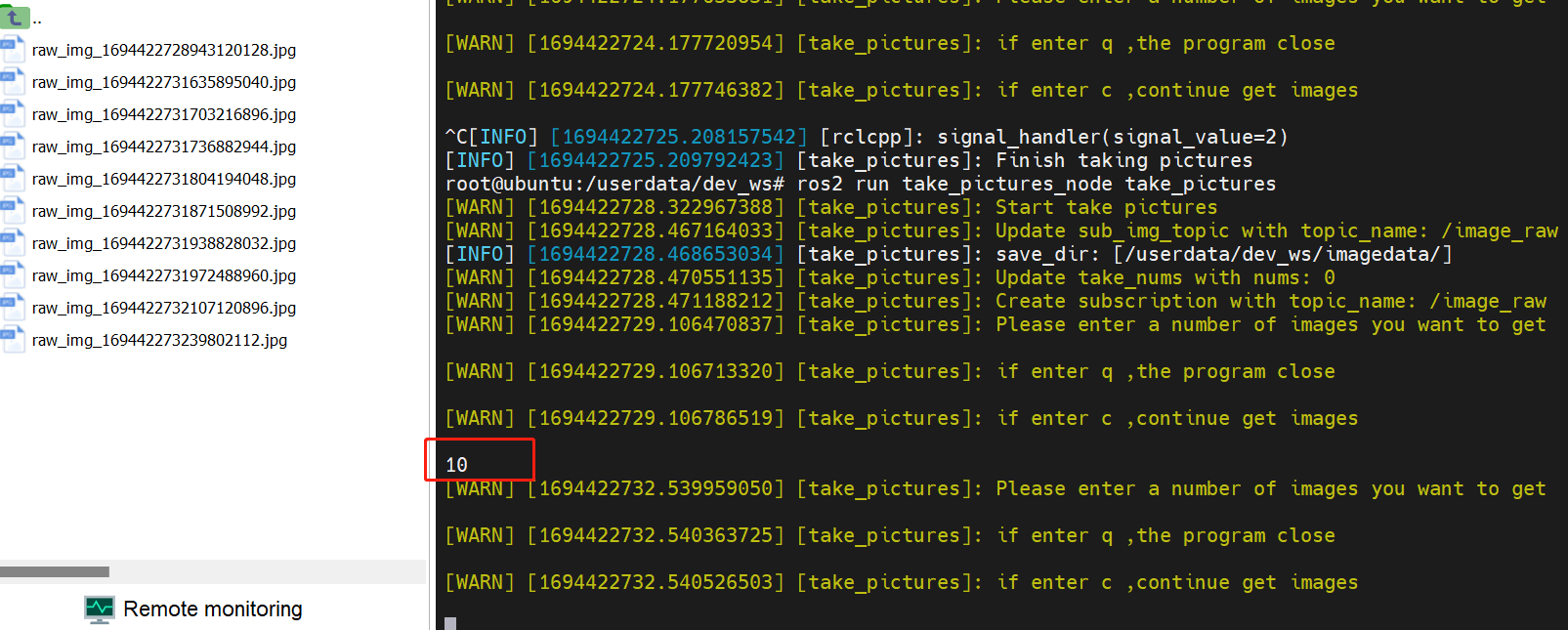

At this point, just write as many data frames as you want to get into the terminal, and the program will automatically save the image to /userdata/dev_ws/imagedata/, if there is no path, the path will be automatically created, as shown in the figure below.

At this point, just write as many data frames as you want to get into the terminal, and the program will automatically save the image to /userdata/dev_ws/imagedata/, if there is no path, the path will be automatically created, as shown in the figure below.

The image looks like this.

The image looks like this.

The images obtained by executing the following command are in rgb data format. In addition, the feature pack provides images in nv12 format for selection.

The images obtained by executing the following command are in rgb data format. In addition, the feature pack provides images in nv12 format for selection.

# First terminal

$ ros2 launch originbot_bringup camera_internal.launch.py

# Second terminal

$ ros2 run take_pictures_node take_pictures --ros-args -p sub_img_topic:=/hbmem_img --ros-args -p take_nums:=0

Description of key modules

In the code implementation, you need to pay attention to hbm_img_msgs::msg::HbmMsg1080P and nv12, nv12 and rgb conversion, as well as rgb and opencv format processing.

void TakePicturesNode::saveHbmImage(

hbm_img_msgs::msg::HbmMsg1080P::SharedPtr image_msg) {

RCLCPP_INFO(rclcpp::get_logger("take_pictures"),"saveHbmImage start");

auto image_nv12 = Hbmem2Sensor(image_msg);

char *buf_src = new char[image_msg->data_size];

cv::Mat mat_src = cv::Mat(image_nv12.height * 1.5, image_nv12.width, CV_8UC1, buf_src);

cv::Mat mat_dst = cv::Mat(image_nv12.height, image_nv12.width, CV_8UC3);

cv::cvtColor(mat_src, mat_dst, cv::COLOR_YUV2BGR_NV12);

auto image_rgb = NV122RGB(image_nv12,mat_dst);

cv_bridge::CvImagePtr cv_ptr = nullptr;

cv_ptr = cv_bridge::toCvCopy(image_rgb, sensor_msgs::image_encodings::RGB8);

// cv::Mat frame_gray;

// cvtColor( cv_ptr->image, frame_gray, cv::COLOR_BGR2GRAY);

cv::Mat frame_rgb = cv_ptr->image;

std::string fName = save_dir_ + "/raw_img_" +

std::to_string(image_rgb.header.stamp.sec) +

std::to_string(image_rgb.header.stamp.nanosec) + ".jpg";

cv::imwrite(fName, frame_rgb);

}